Emotion Recognition with Biofeedback AI for Therapists: A Breakthrough in Mental Health Care

Last Updated on September 16, 2023

As humans, we respond to our emotions naturally through our thoughts, behaviors, and actions. Emotional responses are the ways in which our brain reacts to events around us. The quest to understand our emotions better has sparked a growing body of scientific research on emotion recognition with biofeedback AI.

In this article, we discuss the benefits, challenges, limitations, and future opportunities associated with emotion recognition with biofeedback AI.

Introduction to Emotion Recognition and Biofeedback AI

Emotion recognition is the ability to detect the emotions a person expresses through facial expressions, body language, or tone of voice. The analysis can be done manually by humans or automatically with software. Emotions such as fear, joy, shock, and disgust can all be analyzed this way.

Emotion recognition is fast becoming important in therapy because emotions affect how people make decisions. The emotions a client expresses during and after therapy matter, and every good therapist pays attention to them.

Emotion recognition with biofeedback AI uses machine learning to analyze physiological data and detect an individual's emotions. Analyzing physiological signals with biofeedback AI is one method for identifying emotions such as sadness, anger, anxiety, and happiness.

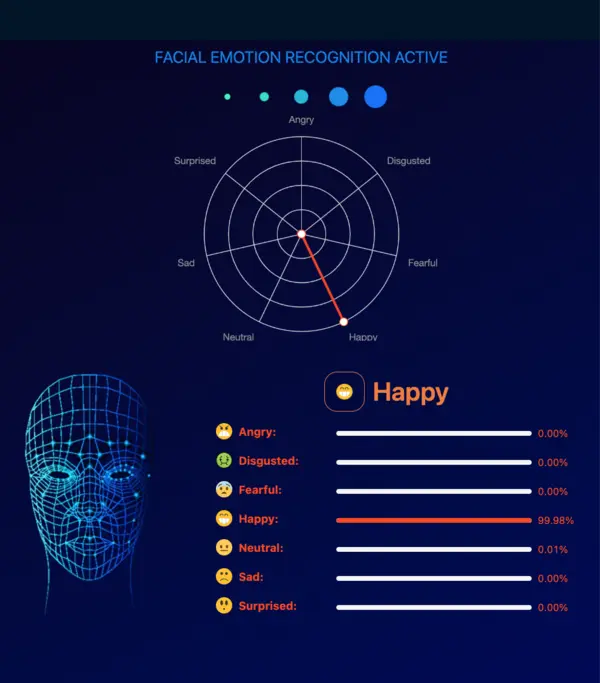

As a therapist, you are also human and can miss or misjudge a client's emotions while taking notes or thinking about a response. Hypnotes Biofeedback AI uses facial recognition to help you detect the emotions your client expresses, and its image-processing technology lets you monitor those emotions in real time.

How Biofeedback AI Works for Emotion Recognition

One approach to biofeedback AI uses electroencephalography (EEG) to record brain signals and an EEG-based emotion recognition algorithm to identify emotions in real time.

Electroencephalography (EEG) is a non-invasive technique that measures the electrical activity of the cerebral cortex by recording electrical potentials on the scalp. Because the cortex is involved in processing emotions and other higher-level functions, this activity can be used to infer a person's emotional state.
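EEG-based systems often summarize the raw signal as power in standard frequency bands (theta, alpha, beta) before any classification happens. The Python sketch below shows that step for a single electrode. It is a generic illustration, not the algorithm of any product named in this article. The band edges are conventional approximations that vary between studies. For readability it uses a naive discrete Fourier transform, where production code would use an FFT-based estimator such as `scipy.signal.welch`.

```python
import math

# Conventional EEG frequency bands in Hz (exact edges vary between studies).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def band_powers(signal, fs):
    """Return the power in each EEG band, summed from a naive DFT periodogram.

    signal: list of voltage samples from one electrode
    fs: sampling rate in Hz
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset before the transform
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fs / n
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        p = (re * re + im * im) / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += p
    return powers
```

A pure 10 Hz oscillation, which lies in the alpha range, puts almost all of its power into the `alpha` entry.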

According to this research, physiological signals can be captured through cameras, sensors, and feedback systems, although EEG itself is recorded with electrodes placed on the scalp. After the signals are recorded, machine learning algorithms analyze the EEG data to identify a person's emotional state. A common example is the convolutional neural network (CNN), a deep learning algorithm that can classify EEG signals into different emotional states.
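To show what a CNN does with an EEG window, here is a minimal forward pass in plain Python. The pipeline is one convolution layer, a ReLU, global average pooling, a dense layer, and a softmax. It is only a sketch. The three-emotion label set, the layer sizes, and the class name `TinyEEGCNN` are hypothetical. The weights are random rather than trained, so its predictions carry no meaning. A real system would learn the weights from labelled EEG recordings in a framework such as PyTorch.

```python
import math
import random

EMOTIONS = ["happy", "sad", "anxious"]  # hypothetical label set

def conv1d(x, kernel, bias):
    """'Valid' 1-D convolution (cross-correlation, as deep learning frameworks define it)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

class TinyEEGCNN:
    """conv -> ReLU -> global average pooling -> dense -> softmax.

    Weights are random (untrained); real weights would be learned from labelled EEG."""

    def __init__(self, n_filters=4, kernel_size=5, n_classes=len(EMOTIONS), seed=0):
        rng = random.Random(seed)
        self.kernels = [[rng.gauss(0, 0.5) for _ in range(kernel_size)]
                        for _ in range(n_filters)]
        self.conv_bias = [0.0] * n_filters
        self.dense = [[rng.gauss(0, 0.5) for _ in range(n_filters)]
                      for _ in range(n_classes)]
        self.dense_bias = [0.0] * n_classes

    def predict_proba(self, eeg_window):
        # Each filter slides over the window and responds to one local waveform shape.
        features = []
        for kernel, b in zip(self.kernels, self.conv_bias):
            activation = relu(conv1d(eeg_window, kernel, b))
            features.append(sum(activation) / len(activation))  # global average pooling
        logits = [sum(w * f for w, f in zip(row, features)) + b
                  for row, b in zip(self.dense, self.dense_bias)]
        return softmax(logits)

    def predict(self, eeg_window):
        probs = self.predict_proba(eeg_window)
        return EMOTIONS[probs.index(max(probs))]
```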

Positive or negative emotions, such as happiness, anxiety, or sadness, can be correlated with changes in the brain's electrical activity. Combining EEG data with an emotion recognition algorithm lets a therapist receive real-time feedback on a client's emotional state.
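Real-time feedback usually means classifying a continuous stream in overlapping windows rather than waiting for a whole session to finish. The sketch below shows that pattern. The `classify` argument stands for any trained model's prediction function and is a hypothetical placeholder. The window and step sizes are illustrative defaults, not values taken from any product.

```python
from collections import deque

def sliding_windows(samples, window_size, step):
    """Yield overlapping windows from a (possibly endless) stream of samples."""
    buf = deque(maxlen=window_size)  # keeps only the most recent window_size samples
    since_last = 0
    for s in samples:
        buf.append(s)
        since_last += 1
        if len(buf) == window_size and since_last >= step:
            yield list(buf)
            since_last = 0

def live_feedback(samples, classify, window_size=256, step=128):
    """Run a (hypothetical) classifier on each window and yield (window_index, label)."""
    for i, window in enumerate(sliding_windows(samples, window_size, step)):
        yield i, classify(window)
```

Assuming EEG sampled at 128 Hz, the defaults classify the most recent two seconds of signal once per second. A smaller step gives faster updates at the cost of more computation.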

Benefits of Using Biofeedback AI in Therapy

Biofeedback AI can improve therapy in several ways. For therapists, emotion recognition with biofeedback AI makes it easier to understand a client's psychological and physiological state during a session.

Biofeedback AI can detect emotions you might miss by eye. It can help you, as a therapist, gain a deeper understanding of your client's mental and physical health and so improve the accuracy of your treatment. Knowing which emotions a client expresses also shows how the client responds to particular stimuli, for example whether a topic triggers stress, anxiety, or joy.

Using Hypnotes Biofeedback AI during therapy helps identify a client's emotional responses quickly, which can lead to faster progress. Biofeedback AI can also reduce the need for in-person sessions, which is useful for clients with busy schedules.

Emotion recognition with Biofeedback AI provides an interactive and engaging experience for both clients and therapists. With Hypnotes Biofeedback AI, therapists can see the physiological responses of clients in real-time and track their progress over time. In addition, physiological responses can be managed more quickly with biofeedback AI, which can make therapy more effective.

Emotion recognition with biofeedback AI may also improve workplace productivity by helping employees stay focused and perform better. In therapy, the technology can give therapists valuable information and insights that help them deliver more accurate and effective treatment.

Examples of Emotion Recognition with Biofeedback AI for Therapists

Emotion recognition with biofeedback AI has a wide range of applications in therapy today, from mood tracking to stress management and treatment planning. In mood tracking, biofeedback AI records a client's mood and emotional condition over time, and the treatment the client receives depends on what these observations reveal.
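Mood tracking over time can be reduced to two small steps: turn each session's recognized emotions into a single score, then measure the trend across sessions. The sketch below assumes a hypothetical split of labels into positive and negative groups. It uses an ordinary least-squares slope as the trend. This is one simple choice among many and is not a clinical measure.

```python
from statistics import mean

# Hypothetical label groups; a real system would use its own label set.
POSITIVE = {"happy", "calm"}
NEGATIVE = {"sad", "anxious", "angry"}

def session_score(labels):
    """Mood score in [-1, 1]: share of positive labels minus share of negative labels."""
    pos = sum(label in POSITIVE for label in labels)
    neg = sum(label in NEGATIVE for label in labels)
    return (pos - neg) / len(labels)

def mood_trend(scores):
    """Least-squares slope of session score against session number.

    A positive slope means mood is improving across sessions. Needs at least two sessions."""
    n = len(scores)
    x_bar, y_bar = (n - 1) / 2, mean(scores)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(scores))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den
```

For example, `mood_trend([session_score(s) for s in sessions])` summarizes a list of per-session label lists in a single number. A slope is less sensitive to one unusual session than a simple comparison of the first and last scores.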

Stress management is another application of emotion recognition with biofeedback AI. Some wearable devices contain EEG sensors that pick up physiological signals from patients. Patients can use the feedback from these devices to practice relaxation techniques, such as deep breathing or meditation, that reduce stress and regulate their physiological responses.
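The feedback loop in such a device can be sketched as a comparison against the wearer's resting baseline. The code below assumes a hypothetical "stress index" reading, whatever the device derives from its sensors. It prompts slow breathing when the reading rises well above baseline. It uses two thresholds (hysteresis) so the cue does not flicker on and off around a single cutoff. The threshold values are illustrative, not recommendations.

```python
from statistics import mean, stdev

def baseline(resting_values):
    """Mean and standard deviation of a (hypothetical) stress index recorded at rest."""
    return mean(resting_values), stdev(resting_values)

def breathing_cues(stream, base_mean, base_sd, on_z=2.0, off_z=1.0):
    """Yield a cue for each reading in the stream.

    Start prompting when the reading rises more than on_z standard deviations above
    baseline; stop only after it falls below off_z (hysteresis avoids flickering cues).
    """
    prompting = False
    for value in stream:
        z = (value - base_mean) / base_sd
        if not prompting and z > on_z:
            prompting = True
        elif prompting and z < off_z:
            prompting = False
        yield "breathe slowly" if prompting else "ok"
```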

Biofeedback AI also lets therapists tailor a treatment plan to each client's needs. The information it provides helps them decide which treatment is likely to be most effective for the patient.

According to this research, emotion recognition is an essential support tool in therapy. Facial expression analysis is important when dealing with a person's mental health, so biofeedback tools can help others understand a client's emotions.

The research also associates poor emotion recognition in the elderly with aging and with the progression of diseases such as dementia, Parkinson's disease, and depression. Facial expression recognition with biofeedback AI makes it possible for therapists to recognize emotions in elderly clients despite age-related changes in their faces.

Examples of Emotion Recognition with Biofeedback AI include the following:

Affectiva

Affectiva is emotion recognition software that detects human emotions in real time. The company calls its technology Human Perception AI, and it is built on more than 4 billion frames captured from 8.7 million different faces. Affectiva provides solutions in areas such as market research, media advertising, and automotive sensing.

Eyeris

Eyeris is facial recognition AI software for automotive cabin sensing. Its goal is to make roads safer while also giving drivers the opportunity to multitask. Eyeris can also detect a wide range of emotions and give insights into human behavior.

EmoVu

EmoVu is AI-driven emotion recognition software that uses deep learning to analyze facial expressions and detect human emotions. It works with cameras or video feeds, so it can analyze people's expressions in real time or from recorded footage.

The technology uses machine learning algorithms to detect facial features such as the eyes, mouth, and eyebrows. It then analyzes these features to identify emotions such as happiness, sadness, anger, surprise, and disgust. It can also track how these emotions change over time, which shows how people feel during a particular event or interaction. EmoVu can be used in a variety of applications, including market research, customer experience management, and mental health diagnosis.
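Per-frame facial emotion scores are noisy, so tracking emotions over time usually involves some smoothing. The sketch below applies an exponential moving average to per-frame scores. This is a generic technique, not EmoVu's documented method. The frame format, a dict mapping each emotion to a score, and the `alpha` value are assumptions for illustration.

```python
def smooth_emotions(frames, alpha=0.3):
    """Exponential moving average over per-frame emotion scores.

    frames: list of dicts mapping emotion -> score for each video frame
            (hypothetical output of a facial expression model)
    alpha: weight given to the newest frame (0 < alpha <= 1); smaller is smoother
    """
    smoothed, state = [], None
    for scores in frames:
        if state is None:
            state = dict(scores)  # start from the first frame
        else:
            state = {e: alpha * scores[e] + (1 - alpha) * state[e] for e in state}
        smoothed.append(state)
    return smoothed

def dominant(scores):
    """Emotion with the highest score."""
    return max(scores, key=scores.get)
```

With `alpha=0.25`, a single frame that flips from "happy" to "sad" only moves the smoothed scores a quarter of the way. The dominant emotion stays "happy" until the change persists over several frames.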

Empath

Empath is emotion recognition software that identifies emotions by analyzing a person's voice. It was built from tens of thousands of voice samples and detects emotions such as anger, joy, sadness, calmness, and vigor. It is designed to work regardless of the language spoken.
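Voice-based emotion recognition commonly starts from acoustic features such as loudness, pitch, and zero-crossing rate. Because these features describe how something is said rather than the words themselves, this helps explain how such systems can be language-independent. The sketch below computes the three features for one short audio frame. It illustrates common speech features, not Empath's actual method, which is not described here. The pitch search range of 75 to 400 Hz is an assumed typical range for speaking voices.

```python
import math

def rms(frame):
    """Root-mean-square energy, a rough measure of loudness."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign differs (higher for noisy or bright sounds)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)

def pitch_autocorr(frame, fs, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) as the lag with the highest autocorrelation."""
    lag_min, lag_max = int(fs / fmax), int(fs / fmin)
    best_lag, best_val = None, float("-inf")
    for lag in range(lag_min, min(lag_max, len(frame) - 1) + 1):
        val = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return fs / best_lag
```

A classifier trained on such features, for example higher pitch and energy for anger or joy and lower values for sadness, would then map them to emotion labels.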

Affective Computing

Affective computing is a field of research pioneered by Rosalind Picard. It uses artificial intelligence and machine learning to build software and algorithms that can recognize and respond to human emotions. Its goal is to detect human emotions accurately from tone of voice, facial expressions, and body language. Affective computing is used in many fields, including therapy, health care, education, and marketing.

Emotion AI

Emotion AI, also known as emotion recognition technology, uses artificial intelligence to detect and interpret human emotions. It can support the diagnosis and treatment of conditions such as depression and anxiety. Emotion AI is applied in chatbots, psychology, healthcare, marketing, and education.

Hypnotes Biofeedback Emotion Recognition

Hypnotes Biofeedback emotion recognition is AI-powered facial recognition software that therapists use to monitor clients' emotions in real time. Hypnotes AI aims to revolutionize therapy by making it easier for clients with different mental health problems to get help. It also offers a voice shareability feature and lets therapists revisit session recordings for further analysis.

Challenges and Limitations of Emotion Recognition with Biofeedback AI

Emotion recognition with biofeedback AI is trained on datasets containing millions of examples of human emotions, and it can only recognize the emotions it has learned from such examples. In real-world applications, AI is not perfect, and it has its own share of drawbacks.

Interpretation: Humans are complex and display a wide range of emotions, which can be difficult for AI to interpret from facial expressions alone. In many cases, AI cannot account for cultural differences in how people express and read emotions, which makes accurate judgments harder.

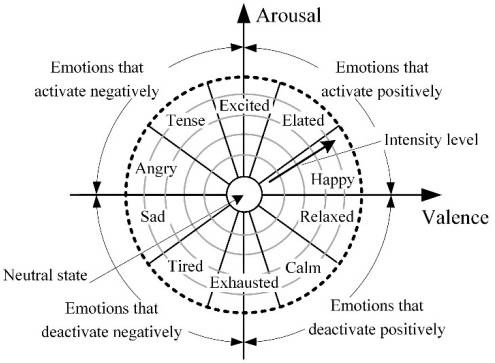

Research by Feidakis, Daradoumis, and Caballé presents a classification of emotions based on fundamental models. It identifies 66 emotions, divided into two groups: ten basic emotions (anger, anticipation, distrust, fear, happiness, joy, love, sadness, surprise, trust) and 56 secondary emotions. Evaluating this many emotions is extremely difficult, especially when recognition and evaluation must be automated. Moreover, similar emotions can produce overlapping values in the parameters being measured.

Ethical concerns: Emotion recognition with biofeedback AI also raises ethical concerns, including the potential misuse of data, privacy, and consent. In terms of privacy, the biofeedback AI system collects data about people's physiological responses, which can be considered an invasion of privacy.

Accuracy: The accuracy of emotion recognition with biofeedback AI depends on several factors, such as the quality of the sensors used and the complexity of the emotional responses. Inaccurate recognition can lead to biased and unfair assessments of emotions.
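A single accuracy figure can hide exactly the kind of bias described above. A model may do well overall while regularly confusing two similar emotions or misreading one group of people. The sketch below computes overall accuracy, a confusion matrix, and per-class recall from true and predicted labels. The labels in the example are made up for illustration.

```python
from collections import Counter

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def confusion_matrix(y_true, y_pred, labels):
    """Rows are true emotions, columns are predicted emotions, cells are counts."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]

def per_class_recall(y_true, y_pred, labels):
    """Share of each true emotion that was recognized correctly (NaN if it never occurs)."""
    cm = confusion_matrix(y_true, y_pred, labels)
    recall = {}
    for i, label in enumerate(labels):
        total = sum(cm[i])
        recall[label] = cm[i][i] / total if total else float("nan")
    return recall
```

In the test data, accuracy is about 67%, but the confusion matrix shows that every error comes from mixing up anger and fear. Computing the same metrics separately for different groups of clients is a simple way to check for unfair assessments.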

Affective computing typically relies on two types of data: behavioral data, such as facial expressions, body posture, and voice, and physiological data, such as heart rate, skin temperature, and galvanic skin response. Behavioral data mainly captures how emotions are expressed and offers little insight into the underlying physiology. Behavioral expressions can also be spontaneous or deliberate, which makes it difficult to assess affective states objectively. Physiological data, in contrast, directly measures bodily activity that cannot easily be concealed, and may therefore provide a more objective assessment of affective states.
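Physiological readings also differ between people at rest. One person's normal heart rate may be another's stressed state. A common remedy is to express each reading relative to that person's own resting baseline before classifying it. The sketch below does this with per-feature z-scores. The feature names and units are hypothetical, and z-scoring is one of several possible normalization choices.

```python
from statistics import mean, stdev

# Hypothetical feature names and units: heart rate (bpm), skin temperature (°C),
# galvanic skin response (microsiemens).
FEATURES = ("heart_rate", "skin_temp", "gsr")

def fit_baseline(rest_samples):
    """Per-feature (mean, standard deviation) from one person's resting recording."""
    baseline = {}
    for f in FEATURES:
        values = [s[f] for s in rest_samples]
        baseline[f] = (mean(values), stdev(values))
    return baseline

def normalize(sample, baseline):
    """Express each reading as standard deviations away from that person's own baseline."""
    return {f: (sample[f] - baseline[f][0]) / baseline[f][1] for f in FEATURES}
```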

Cost: Biofeedback AI technology can be expensive, which may limit its accessibility to certain individuals or organizations.

User comfort: Finally, active emotion-inducing tasks can cause participants discomfort or stress. This can affect the accuracy of the data collected, as users may alter their behavior or physiological responses because they feel uncomfortable or self-conscious.

Future Directions and Opportunities for Emotion Recognition with Biofeedback AI

If it can measure human emotions accurately, emotion recognition with biofeedback AI could revolutionize fields such as psychology, health care, education, and marketing in the coming years. Emotion recognition is already being used in therapy for mental health problems such as depression and anxiety.

Emotion recognition can also make gaming more immersive, for example by adjusting a game's difficulty to the emotions a player expresses. Emotion recognition with biofeedback AI could also be used in brain-computer interfaces that let people communicate without speaking.
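Emotion-driven difficulty adjustment can be as simple as nudging a difficulty level after each recognized emotion. The sketch below shows that rule. The emotion labels ("frustrated", "anxious", "bored") and the 1 to 10 difficulty scale are hypothetical. A real game would tune both and would probably act only on emotions that persist, not on single readings.

```python
def adjust_difficulty(level, emotion, min_level=1, max_level=10):
    """Lower difficulty when the player seems frustrated or anxious, raise it when bored.

    Labels and level range are hypothetical; the result is clamped to [min_level, max_level].
    """
    if emotion in ("frustrated", "anxious"):
        level -= 1
    elif emotion == "bored":
        level += 1
    return max(min_level, min(max_level, level))
```

Clamping keeps the game within its designed range however long a player stays frustrated or bored.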

Emotion recognition will play an important role in advertising and marketing, where it can be used to understand consumer behavior and satisfaction. Knowing how consumers feel about a particular product would help advertising firms target their adverts at the right market.

Emotion recognition is likely to find relevance in other fields in the coming years as well. Overall, it has a promising future, with room for further development and application across many industries.